Researchers said that autopilot systems used by popular cars – including the Tesla Model X – can be fooled into treating fake images, projected by drones onto the road or displayed on surrounding billboards, as real. Attackers could potentially leverage this design flaw to trigger the systems to brake or to steer cars into oncoming traffic lanes, they said.

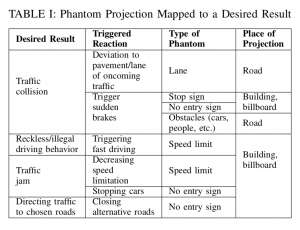

The issue stems from advanced driving assistance systems (ADAS), which are used by semi-autonomous vehicles to help the driver while driving or parking. By detecting and reacting to obstacles in the road, ADAS is designed to increase driver safety. However, researchers said that they were able to create “phantom” images purporting to be an obstacle, lane or road sign; use a projector to transmit the phantom within the autopilot’s range of detection; and trick the systems into believing that the phantoms are legitimate.

“The absence of deployed vehicular communication systems, which prevents the advanced driving assistance systems (ADASs) and autopilots of semi/fully autonomous cars to validate their virtual perception regarding the physical environment surrounding the car with a third party, has been exploited in various attacks suggested by researchers,” said a team of researchers from the Ben-Gurion University of the Negev in a post last week. They also presented the research at the Cybertech Israel conference in Tel Aviv last week.

To develop a phantom proof-of-concept attack, researchers looked at two prevalent ADAS technologies: the Mobileye 630 PRO (used in vehicles like the Mazda 3) and Tesla’s HW 2.5 autopilot system, which comes embedded in the Tesla Model X. On the scale of level 0 (no automation) to level 5 (full automation), these two systems are considered “level 2” automation. That means they support semi-autonomous driving by acting as an autopilot, but still require a human driver for monitoring and intervention. These systems use various depth sensors and video cameras to detect obstacles on the road within a range of 300 meters.

To create the attack, researchers simply developed an image to be projected – with no difficult technical requirements other than making the image bright and sharp enough to be detected by the ADAS technologies.

“When projecting images on vertical surfaces (as we did in the case with the drone) the projection is very simple and does not require any specific effort,” Ben Nassi, one of the researchers with the Ben-Gurion University of the Negev who developed the attack, told Threatpost.

“When projecting images on horizontal surfaces (e.g., the man projected on the road), we had to morph the image so it will look straight by the car’s camera since we projected the image from the side of the road. We also brightened the image in order to make it more detectable since a real road does not reflect light so well.”

Then, they projected these phantom images to nearby target vehicles, either embedded within advertisements on digital billboards, or via a portable projector, mounted on a drone. In one instance, researchers showed how they were able to cause the Tesla Model X to brake suddenly due to a phantom image, perceived as a person, projected in front of the car. In another case, they were able to cause the Tesla Model X’s system to deviate to a lane of oncoming traffic by projecting phantom lanes that veered toward the other side of the road.

Researchers said that phantom attacks have not yet been encountered in the wild. However, they warned that the attacks do not require any special expertise or complex preparation (a drone and a portable projector cost only a few hundred dollars, for instance) and that, if the attacker is using a drone, the attacks can potentially be launched remotely.

Phantom attacks are not security vulnerabilities, researchers said, but instead “reflect a fundamental flaw of models that detect objects that were not trained to distinguish between real and fake objects.”

Threatpost reached out to Tesla and Mobileye for further comment and had not heard back by the time of publication.

Researchers said that they were in contact with Tesla and Mobileye regarding the issue via the companies’ bug bounty programs from early May to October 2019. However, they said, the vendors responded that the phantom attacks did not stem from an actual vulnerability in the system. “There was no exploit, no vulnerability, no flaw, and nothing of interest: the road sign recognition system saw an image of a street sign, and this is good enough, so Mobileye 630 PRO should accept it and move on,” researchers said Mobileye told them.

Tesla told researchers that it would not “comment” on the findings because some of the research relied on an “experimental” stop sign recognition system for the autopilot, which meant the vehicle’s configuration had been changed. “We cannot provide any comment on the sort of behavior you would experience after doing manual modifications to the internal configuration – or any other characteristic, or physical part for that matter – of your vehicle,” researchers said Tesla told them. (The experimental code has since been removed from the firmware, researchers said.)

However, researchers said that while this “experimental” stop sign recognition system was utilized in a PoC to detect projected stop signs, “We did not influence the behavior that led the car to steer into the lane of oncoming traffic or suddenly put on the brakes after detecting a phantom,” they said. “Since Tesla’s stop sign recognition system is experimental and is not considered a deployed functionality, we chose to exclude this demonstration from the paper.”

For their part, the researchers said that configuring ADAS to take into account an object’s context, reflected light and surface would help mitigate the issue, since those cues would help the system tell phantom images apart from real objects.
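
As a rough illustration of that idea, the Python sketch below gates an object detection on three extra cues. It is not the researchers’ implementation or anything used by Tesla or Mobileye: the `DetectionCues` class, the `is_likely_real` function, the 0-to-1 score scale, the 0.5 threshold and the choice to use the weakest cue are all assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass
class DetectionCues:
    """Hypothetical per-detection scores in [0, 1]; 1.0 means 'looks real'."""
    context: float  # does the object fit its surroundings (e.g. a sign on a pole)?
    light: float    # is the reflected light consistent with a physical object?
    surface: float  # does the surface look like a real object rather than a projection?


def is_likely_real(cues: DetectionCues, detector_confidence: float,
                   threshold: float = 0.5) -> bool:
    """Accept a detection only if the detector and every cue clear the threshold.

    Using the weakest cue is an illustrative choice: a phantom that fits its
    context but reflects light like a projection is still rejected.
    """
    scores = (cues.context, cues.light, cues.surface, detector_confidence)
    if any(not 0.0 <= s <= 1.0 for s in scores):
        raise ValueError("all scores must be in [0, 1]")
    weakest_cue = min(cues.context, cues.light, cues.surface)
    return detector_confidence >= threshold and weakest_cue >= threshold


# A confidently detected pedestrian whose light and surface cues look projected.
phantom = DetectionCues(context=0.7, light=0.1, surface=0.2)
# A confidently detected pedestrian with consistent cues.
real = DetectionCues(context=0.9, light=0.8, surface=0.9)

phantom_accepted = is_likely_real(phantom, detector_confidence=0.95)
real_accepted = is_likely_real(real, detector_confidence=0.95)
```

Under these assumed scores, the phantom is rejected despite the detector’s high confidence, while the real object is accepted. How such cue scores would actually be computed is the hard part and is outside the scope of this sketch.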

Connected vehicles have long been a target for hacking, most notably the 2015 hack of a Jeep Cherokee that enabled control of key functions of the car. Other vehicle-related attacks have targeted everything from keyless entry systems to in-vehicle infotainment systems.