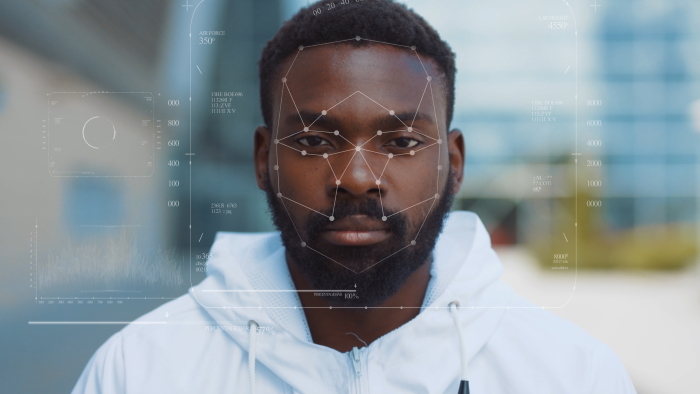

More than 1,000 technology experts and academics from organizations such as MIT, Microsoft, Harvard and Google have signed an open letter denouncing a forthcoming paper describing artificial intelligence (AI) algorithms that can predict crime based only on a person’s face, calling it out for promoting racial bias and propagating a #TechtoPrisonPipeline.

The move shows growing concern over the use of facial-recognition technology by law enforcement as well as support for the Black Lives Matter movement, which has spurred mass protests and demonstrations across the globe after a video went viral depicting the May 25 murder of George Floyd by a former Minneapolis police officer.

The algorithms are outlined in a paper written by researchers at Harrisburg University in Pennsylvania called “A Deep Neural Network Model to Predict Criminality Using Image Processing” that is soon to be published by Springer Publishing based in Berlin, Germany. The paper describes an “automated computer facial recognition software capable of predicting whether someone is likely going to be a criminal,” according to a press release about the research.

The letter, published on the Medium content-sharing platform of the Coalition for Critical Technology, demands that Springer rescind the offer of publication and publicly condemn “the use of criminal justice statistics to predict criminality.” Experts also are asking Springer to acknowledge its own behavior in “incentivizing such harmful scholarship in the past,” and request that “all publishers” refrain from publishing similar studies in the future.

While crime-prediction technology based on computational research in and of itself is not specifically racially biased, it “reproduces, naturalizes and amplifies discriminatory outcomes,” and is also “based on unsound scientific premises, research, and methods, which numerous studies spanning our respective disciplines have debunked over the years,” experts wrote in their letter.

They outline three key ways these types of algorithms are problematic and result in discriminatory outcomes by the justice system. The first refutes a claim in the press release about the paper that the algorithms can “predict if someone is a criminal based solely on a picture of their face,” with “80 percent accuracy and with no racial bias.” This is impossible because the very idea of “criminality” itself is racially biased, researchers wrote in the letter.

“Countless studies have shown that people of color are treated more harshly than similarly situated white people at every stage of the legal system, which results in serious distortions in the data,” researchers wrote.

The second point the letter makes cites a historical problem in the validity of AI and machine learning: those in the field are rarely trained in “the critical methods, frameworks, and language necessary to interrogate the cultural logics and implicit assumptions underlying their models.”

“To date, many efforts to deal with the ethical stakes of algorithmic systems have centered mathematical definitions of fairness that are grounded in narrow notions of bias and accuracy,” according to the letter. “These efforts give the appearance of rigor, while distracting from more fundamental epistemic problems.”

The third and final argument points out that any crime-prediction technology “reproduces injustices and causes real harm” to victims of a criminal-justice system that is currently under harsh scrutiny for its methods and practices.

The authors of the Harrisburg University study claim that their algorithms will provide “a significant advantage for law enforcement agencies and other intelligence agencies to prevent crime,” according to the press release.

However, these are the same agencies now under fire for unnecessary racial profiling and use of force, and for the advantage they have historically held over people accused of crimes. They therefore don’t need more of the same, experts said.

“At a time when the legitimacy of the carceral state, and policing in particular, is being challenged on fundamental grounds in the United States, there is high demand in law enforcement for research … which erases historical violence and manufactures fear through the so-called prediction of criminality,” they wrote in the letter.

Indeed, the use of facial recognition technology in general has always been controversial, and it is currently facing new legal, ethical and commercial challenges, including to its use by law enforcement. Microsoft recently joined Amazon and IBM in banning the sale of facial recognition technology to police departments and pushing for federal laws to regulate the technology.

Meanwhile, the COVID-19 pandemic has also renewed fears about the use of facial-recognition technology in contact tracing and other ways authorities attempt to stop the spread of the virus. Privacy experts worry that in the rush to implement virus-tracking capabilities, important and deep-rooted issues around data collection and storage, user consent, and surveillance will be ignored.
