Google is unveiling new privacy controls for the Google Assistant virtual assistant, after the company came under fire earlier this year for eavesdropping on users’ personal audio snippets – without their permission.

The tech giant on Monday promised more transparency around the audio data that it collects, more user control over audio privacy preferences, as well as greater “security protections” around the audio collection process. Google also said it will take steps to minimize the audio data that it stores, by automatically deleting audio data that’s older than a few months.

“Recently we’ve heard concerns about our process in which language experts can listen to and transcribe audio data from the Google Assistant to help improve speech technology for different languages,” said Nino Tasca, senior product manager with Google Assistant, in a Monday post. “It’s clear that we fell short of our high standards in making it easy for you to understand how your data is used, and we apologize.”

The improved privacy steps come on the heels of a July report by Belgian news outlet VRT NWS. The outlet said it had obtained more than one thousand recordings from a Dutch-language subcontractor who was hired as a “language reviewer” to transcribe audio collected by Google Home and Google Assistant, helping Google better understand the accents used in that language.

Of those recordings, 153 should never have been captured, because the wake-up command “OK Google” was clearly not given, the report said. Disturbingly, the subcontractor was able to access highly personal audio, including domestic violence incidents and confidential business calls, and even some users asking their smart speakers to play porn on their connected mobile devices.

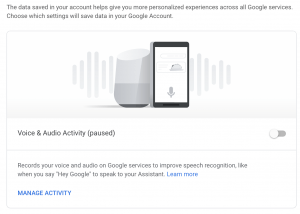

Google at the time argued that its language experts only review around 0.2 percent of all audio snippets. In addition, it argued, audio recordings are not retained by default – users would need to opt in by turning on the Voice & Audio Activity (VAA) setting.

Regardless, the incident drew public backlash, and Google paused the program to investigate. Now Google says it is resuming the program, with several settings updates meant to provide more transparency and user control over how Google Assistant collects data.

For one, Google has updated its audio privacy policy so that when users turn on the VAA setting, it now clearly states that human reviewers may listen to Google Assistant audio snippets to help improve speech technology. Google also said it will add “greater security protections” to the process of contractors listening to audio snippets, “including an extra layer of privacy filters.” Audio snippets will continue not to be associated with any user accounts, it said.

Google is also updating its policy to “vastly reduce the amount of audio data we store.” That includes automatically deleting the “vast majority” of users’ audio data that is older than a few months. Google said this change will arrive later this year.

Finally, Google will also give users more control to reduce “unintentional activations,” so that Google Assistant won’t accidentally be prompted – and contractors won’t hear audio that wasn’t meant to be heard.

“Soon we’ll also add a way to adjust how sensitive your Google Assistant devices are to prompts like ‘Hey Google,’ giving you more control to reduce unintentional activations, or if you’d prefer, make it easier for you to get help in especially noisy environments,” said Tasca.

In the past year, Amazon, Microsoft, Facebook and Apple have all come under increased scrutiny over how much audio data they collect, what that data is, how long it is retained and who accesses it. Amazon, for instance, has acknowledged that it retains the voice recordings and transcripts of customers’ interactions with its Alexa voice assistant indefinitely.

In response to the privacy outcry, several other tech giants have also scrambled to amend their voice assistant privacy policies. In August, Apple announced that it was taking steps to improve the privacy of audio collected by its Siri voice assistant, including bringing the review process in-house and allowing users to opt out of it. That move followed backlash over a program that let contractors listen in on Siri conversations.

Moving forward, “we believe in putting you in control of your data, and we always work to keep it safe,” said Google. “We’re committed to being transparent about how our settings work so you can decide what works best for you.”
