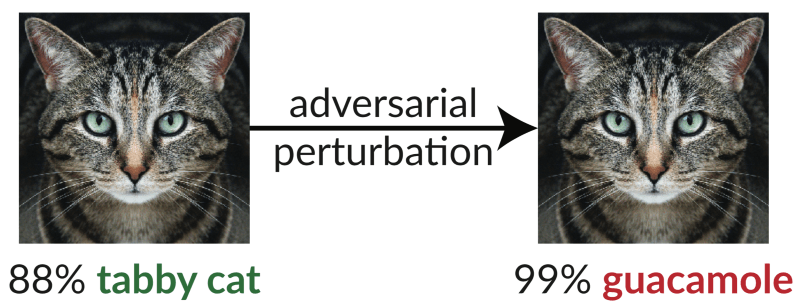

SAN FRANCISCO – The same machine-learning algorithms that made self-driving cars and voice assistants possible can be hacked to turn a cat into guacamole or Bach symphonies into audio-based attacks against a smartphone.

These are examples of “adversarial attacks” against machine learning systems whereby someone can subtly alter an image or sound to trick a computer into misclassifying it. The implications are huge in a world growing more saturated with so-called machine intelligence.

Here at the RSA Conference, Google researcher Nicholas Carlini gave attendees an overview of attack vectors that could not only flummox machine-learning systems, but also cause them to inadvertently leak sensitive information from large data sets.

Neural Network Abuse

Underlying each of these attacks are the massive data sets that are used to help computer algorithms recognize patterns. One example Carlini shared with attendees was a system that classified millions of images of cats. Thanks to machine learning, the computer recognized the characteristics of a cat so well that when presented with an image of a dog, it could confidently determine the image was a mismatch.

But Carlini said data scientists have found exploitable weaknesses in the way these systems learn from large data sets. The models trained on those data sets are called neural networks, systems that loosely mimic the way a brain works.

“These two images [of cats], which look essentially indistinguishable to all of us as humans, are completely different objects from the perspective of a neural network,” he said. The only difference between the two is a scattering of small dots on one of the images, which is enough to confuse the neural network into classifying it as guacamole.

When it comes to using this technique in the context of an adversarial attack, Carlini said, consider the implications of a defaced stop sign understood by a self-driving car as a 45 mph speed limit sign.

Adversarial Audio Attack

“It’s important to note that this is not just a problem that exists with images, but also with audio,” he said.

Carlini then played an instrumental excerpt of a J. S. Bach symphony. Next, a voice-to-text analysis used by a neural network interpreted the audio as a Charles Dickens quote, “It was the best of times, it was the worst of times.” The audio sample had been manipulated to be interpreted as text.

Illustration of the attack: given any waveform, adding a small perturbation makes the result transcribe as any desired target phrase.

Carlini’s examples, using an 18th-century composer and a 19th-century writer, have real implications in the 21st century. The Google scientist demonstrated, with a video, how garbled audio could be heard by an Android phone as instructions to open a specific app or a rogue website.

“It would be bad if I walked up to your phone and said, ‘forward your most recent email to me’ or ‘browse to some malicious website,’” he said. With the right camouflaged audio, an adversary could command an Android phone to do any number of risky tasks.

“I could just play some audio embedded in a YouTube video, and now anyone who watches the video gets the command,” he said.

Low Bar to Attack

The attacks themselves aren’t that sophisticated or hard to carry out. They are based on the way neural networks classify images. Here is an oversimplified explanation.

A neural network assigns each candidate label a percent confidence and files the image under the label that scores highest. For example, a clean, crisp image of a cat may have a 98 percent likelihood of being a cat to a machine-learning program, and be so classified. Add one imperceptible dot to the cat image and the neural network might drop the percentage likelihood it is a cat to 97.5 percent. With enough imperceptible changes (dots), the confidence of the neural network in identifying the cat is eroded. At a certain threshold, the neural network stops seeing the image as a cat and jumps to the next most likely label. In the example Carlini shared, the next closest was guacamole.
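To make the erosion concrete, here is a minimal Python sketch. It is not Carlini's demonstration: the 64-pixel "image," the hand-picked weights and the two-label "cat vs. guacamole" model are all invented for illustration, and a real image classifier is far larger. The sketch nudges one pixel at a time by a small amount and records how the model's confidence in "cat" falls until the label flips.

```python
import math

def cat_confidence(pixels, weights, bias):
    """Toy two-label model: sigmoid of a weighted pixel sum = P(cat)."""
    score = sum(w * p for w, p in zip(weights, pixels)) + bias
    return 1.0 / (1.0 + math.exp(-score))

def erode_confidence(pixels, weights, bias, step=0.05, max_steps=1000):
    """Nudge one pixel per step until the model stops answering 'cat'."""
    adv = list(pixels)
    history = [cat_confidence(adv, weights, bias)]
    for n in range(max_steps):
        if history[-1] < 0.5:
            break  # "cat" is no longer the most likely label
        i = n % len(adv)  # visit pixels round-robin
        # Move the pixel in whichever direction lowers the cat score.
        direction = -1.0 if weights[i] > 0 else 1.0
        adv[i] = min(1.0, max(0.0, adv[i] + direction * step))
        history.append(cat_confidence(adv, weights, bias))
    return adv, history

# Hypothetical model and image, chosen so the starting confidence is about 98%.
weights = [0.5 if i % 2 == 0 else -0.5 for i in range(64)]
image = [0.5] * 64
adv, history = erode_confidence(image, weights, bias=4.0)

label = "cat" if history[-1] >= 0.5 else "guacamole"
biggest_change = max(abs(a - b) for a, b in zip(adv, image))
print(f"start {history[0]:.3f}, end {history[-1]:.3f}, "
      f"nudges {len(history) - 1}, largest pixel change {biggest_change:.2f}, "
      f"label {label}")
```

Each nudge costs the model only a sliver of confidence, but the slivers add up, and no single pixel has to change by much before the label flips.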

“It’s really not that hard to generate these types of attacks,” he said. He added that applying mathematics can help an adversary minimize the time it takes to create a deceptive image that can defy common sense.

“If you can calculate the derivative of the image and the neural network with respect to some loss function, you can more easily maximize how wrong the classification is,” he said.
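One well-known way to use that derivative is the fast gradient sign method, which moves every pixel a small step in the direction that increases the loss. The Python sketch below applies it to a toy model; the talk as reported here does not say which method Carlini used, so treat the choice of technique, the 64-pixel "image," the weights and the step size `epsilon` as assumptions made for illustration. For this simple model the gradient of the cross-entropy loss with respect to each pixel works out to `(p - y) * w`.

```python
import math

def predict_cat(pixels, weights, bias):
    """Toy two-label model: sigmoid of a weighted pixel sum = P(cat)."""
    score = sum(w * p for w, p in zip(weights, pixels)) + bias
    return 1.0 / (1.0 + math.exp(-score))

def loss_gradient(pixels, weights, bias, true_label=1.0):
    """Derivative of cross-entropy loss with respect to each pixel."""
    p = predict_cat(pixels, weights, bias)
    return [(p - true_label) * w for w in weights]

def sign(value):
    return (value > 0) - (value < 0)

def gradient_sign_step(pixels, weights, bias, epsilon):
    """Shift every pixel by epsilon in the direction that raises the loss."""
    grad = loss_gradient(pixels, weights, bias)
    return [min(1.0, max(0.0, x + epsilon * sign(g)))
            for x, g in zip(pixels, grad)]

# Hypothetical model and image (illustrative values only).
weights = [0.5 if i % 2 == 0 else -0.5 for i in range(64)]
image = [0.5] * 64
bias = 4.0

before = predict_cat(image, weights, bias)
adv = gradient_sign_step(image, weights, bias, epsilon=0.15)
after = predict_cat(adv, weights, bias)
print(f"P(cat) before: {before:.3f}, after one gradient step: {after:.3f}")
```

Because the gradient says which way to push every pixel at once, a single step is enough to flip this toy model, where changing one pixel at a time takes many steps.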

Privacy of Training Data

As machine learning is adopted by more industries looking to use neural network intelligence to solve problems, some privacy risks are inherent, Carlini said. For example, hospitals, retailers and governments could want to create a neural network based on a wide range of sensitive data sets.

Carlini warned that if proper restrictions aren’t applied to those data sets, personal information could be extracted by an adversary who has only limited access to the actual data.

In this context, neural networks are used in predictive models for queries. For example, emails or text apps will often predict the next word you’ll use. When such a model is trained on a data set rich with sensitive information, an adversary could query the model and get it to leak that information inadvertently.

One example demonstrated was when someone started typing “Nicholas’ social security number is … ” the algorithm drew on what it had learned from the data set and delivered the right answer via autocomplete. Other scenarios include data sets with cancer treatment data, credit card information and addresses.
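A toy version of that leak fits in a few lines of Python. This is only a sketch: the model is a simple word-sequence counter rather than a neural network, and the three-sentence corpus, the name and the placeholder number `000-00-0000` are all made up. It shows how a model that memorizes a rare sentence will hand it back to anyone who supplies the right prefix.

```python
from collections import Counter, defaultdict

def train(corpus, order=3):
    """Count which word follows each run of `order` consecutive words."""
    table = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.split()
        for i in range(len(words) - order):
            context = tuple(words[i:i + order])
            table[context][words[i + order]] += 1
    return table

def autocomplete(table, prompt, order=3, max_words=5):
    """Extend the prompt with the most likely next word, repeatedly."""
    words = prompt.split()
    for _ in range(max_words):
        context = tuple(words[-order:])
        if context not in table:
            break  # the model has never seen this context
        words.append(table[context].most_common(1)[0][0])
    return " ".join(words)

# Hypothetical training data; the "secret" is a placeholder, not a real number.
corpus = [
    "the meeting is at noon on friday",
    "the report is due on monday",
    "alice's social security number is 000-00-0000",
]
model = train(corpus)

# The attacker only has query access, yet recovers the memorized value.
completion = autocomplete(model, "social security number is")
print(completion)
```

The attacker never sees the training data, only the model's completions, which is the query-only access Carlini described.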

“[Companies] should think carefully before using machine learning with training data. It is possible to pull private data, even when a user only has query access to the model,” he said.

The key to protecting against information leaks, Carlini said, is to start by reducing the model’s “memorization” of data within a data set to what is essential for a task.

“The first reason that you should be careful about using machine learning is this apparent lack of robustness. Machine-learning classifiers are fairly easy to fool,” he said. In a world where computers can see a stop sign as a 45 mph sign and music is recognized as speech to control smart devices remotely, designers of neural networks should think carefully about how they build their systems, he said.