Threatpost Op-Ed is a regular feature where experts contribute essays and commentary on what’s happening in security and privacy. Today’s contributors are Dave Dittrich and Katherine Carpenter.

Reports of APT activities detail compromises that span multiple organizations, sectors, industry verticals, and countries, over multiple years. According to MITRE: “it is becoming increasingly necessary for organizations to have a cyber threat intelligence capability, and a key component of success for any such capability is the information sharing with partners, peers and others they select to trust.” Sharing information benefits people working in any organization, because information from outside a particular organization can improve its internal security.

Some in the computer security industry call APT activities “attacks,” a framing intended to build support for a claimed right of self-defense (using the highly charged and loosely defined term active defense). The more appropriate term, in our opinion, is compromise; specifically, compromise of the integrity, availability, and/or confidentiality of targeted organizations’ information and information systems.

The activities required to establish and maintain an assured state in the face of compromise include protection, detection, and response. The more aggressive of these acts fall along what Himma and Dittrich call the “Active Response Continuum.” Anyone incapable of clearly articulating and analyzing the ethical and legal implications of these actions should not be trusted to engage in the most extreme of them, let alone be granted exemptions from computer crime statutes in the name of “self-defense.”

If we “assume breach” (that is, accept that compromise will occur, so the cycle of protection, detection, and response is the norm rather than some unforeseeable exception), then the prudent course of action is to use all available information to address compromise more effectively. This information comes in the form of Observables and Indicators of Compromise, as described in MITRE’s 2012 paper on its Structured Threat Information eXpression (STIX) framework.

Too often there is confusion about Observables and Indicators of Compromise (IOCs), stemming from a lack of knowledge, imprecise language, or other simple human failings (sometimes deliberate, serving a narrow self-interest rather than efforts to improve the national security posture for the common good). Let’s start with MITRE’s definitions of these terms:

“Observables are stateful properties and measurable events pertinent to the operation of computers and networks. Information about a file (name, hash, size, etc.), a registry key value, a service being started, or an HTTP request being sent are all simple examples of Observables. […] Indicators are a construct used to convey specific Observables combined with contextual information intended to represent artifacts and/or behaviors of interest within a cyber security context. They consist of one or more Observables potentially mapped to a related TTP context and adorned with other relevant metadata on things like confidence in the indicator’s assertion, handling restrictions, valid time windows, likely impact, sightings of the indicator, structured test mechanisms for detection, suggested course of action, the source of the indicator, etc.”
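To make the distinction concrete, here is a minimal Python sketch of MITRE’s description: an Observable is a bare fact, while an Indicator bundles one or more Observables with context such as confidence, a validity window, a related TTP, and a suggested course of action. The class and field names are hypothetical simplifications chosen for illustration, not the actual STIX schema, and the address and hash are placeholders.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass(frozen=True)
class Observable:
    """A stateful property or measurable event, with no judgment attached."""
    kind: str   # hypothetical type labels, e.g. "ipv4-addr", "domain-name", "file-md5"
    value: str


@dataclass
class Indicator:
    """One or more Observables plus the context that makes them actionable.
    Field names are illustrative, not the STIX schema."""
    title: str
    observables: list[Observable]
    confidence: str                         # e.g. "low", "medium", "high"
    valid_from: datetime
    valid_until: Optional[datetime] = None  # None means no expiry was set
    related_ttp: Optional[str] = None
    suggested_coa: Optional[str] = None
    source: Optional[str] = None

    def __post_init__(self) -> None:
        # Per MITRE, an Indicator consists of one or more Observables.
        if not self.observables:
            raise ValueError("an Indicator needs at least one Observable")

    def is_valid_at(self, when: datetime) -> bool:
        """True if `when` falls inside the Indicator's validity window."""
        if when < self.valid_from:
            return False
        return self.valid_until is None or when <= self.valid_until


if __name__ == "__main__":
    ip = Observable("ipv4-addr", "198.51.100.7")  # documentation-range address
    md5 = Observable("file-md5", "0" * 32)         # placeholder hash
    ind = Indicator(
        title="Hypothetical dropper beaconing to a staging host",
        observables=[ip, md5],
        confidence="medium",
        valid_from=datetime(2015, 1, 1),
        valid_until=datetime(2015, 6, 30),
        suggested_coa="Block outbound traffic to the address; quarantine matching files",
    )
    print(ind.is_valid_at(datetime(2015, 3, 1)))  # True
```

A bare list of `Observable` values carries no confidence, no time window, and no recommended action; in this sketch those live only on the `Indicator`, which is the point of MITRE’s distinction.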

MITRE also defines several interrelated terms that address higher-level constructs and organizational objectives: Incidents; Tactics, Techniques, and Procedures (TTPs); Campaigns; Threat Actors; and Courses of Action (COAs).

Conflating Observables with IOCs, and using Observables improperly, result in high rates of false-positive alerts, as Alex Sieira noted on the Infosec Zanshin blog. What are sold as “threat intelligence” feeds are often really Observables feeds, not “intelligence,” and should not be used as the sole basis for triggering alerts. The author’s conclusion is reasonable, but the things he describes (“IP address, domain names, and URLs mostly”) are actually Observables, not IOCs. He explains that you can’t simply alert on the presence of those Observables. Implicitly, he is calling for the use of IOCs, which combine multiple Observables with confidence levels and other context to reduce the probability of false positives.
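The difference shows up directly in alerting logic. The Python sketch below contrasts two hypothetical rules: one fires whenever any value from an Observables feed appears in observed events, and the other fires only when every Observable in an Indicator is present and the Indicator’s confidence meets a threshold. The types, the 0.0 to 1.0 confidence scale, and the 0.7 threshold are illustrative assumptions, not taken from the blog post or from STIX.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Observable:
    """A single measurable fact, such as an IP address or domain name."""
    kind: str
    value: str


@dataclass(frozen=True)
class Indicator:
    """Hypothetical indicator: a set of Observables plus a confidence score."""
    name: str
    observables: frozenset[Observable]
    confidence: float  # assumed scale: 0.0 (none) to 1.0 (certain)


def naive_alerts(events: set[Observable], feed: set[Observable]) -> set[Observable]:
    """Alert on every feed value seen in the events, with no context at all."""
    return events & feed


def indicator_alerts(events: set[Observable], indicators: list[Indicator],
                     min_confidence: float = 0.7) -> list[str]:
    """Alert only when all of an Indicator's Observables were seen and its
    confidence meets the (assumed) threshold."""
    return [ind.name for ind in indicators
            if ind.confidence >= min_confidence and ind.observables <= events]


if __name__ == "__main__":
    shared_ip = Observable("ipv4-addr", "203.0.113.10")  # e.g. a shared hosting address
    c2_domain = Observable("domain-name", "c2.example")   # hypothetical C2 domain
    feed = {shared_ip, c2_domain}
    indicator = Indicator("hypothetical-c2", frozenset(feed), confidence=0.8)

    benign = {shared_ip, Observable("domain-name", "news.example")}
    print(naive_alerts(benign, feed))                       # fires on the shared IP alone
    print(indicator_alerts(benign, [indicator]))            # no alert: C2 domain not seen
    print(indicator_alerts(benign | {c2_domain}, [indicator]))  # alert: both Observables seen
```

Here a single visit to a shared-hosting address trips the naive rule, while the indicator rule waits for the corroborating domain. Real detection also weighs time windows, ordering, and sightings, which this sketch deliberately ignores.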

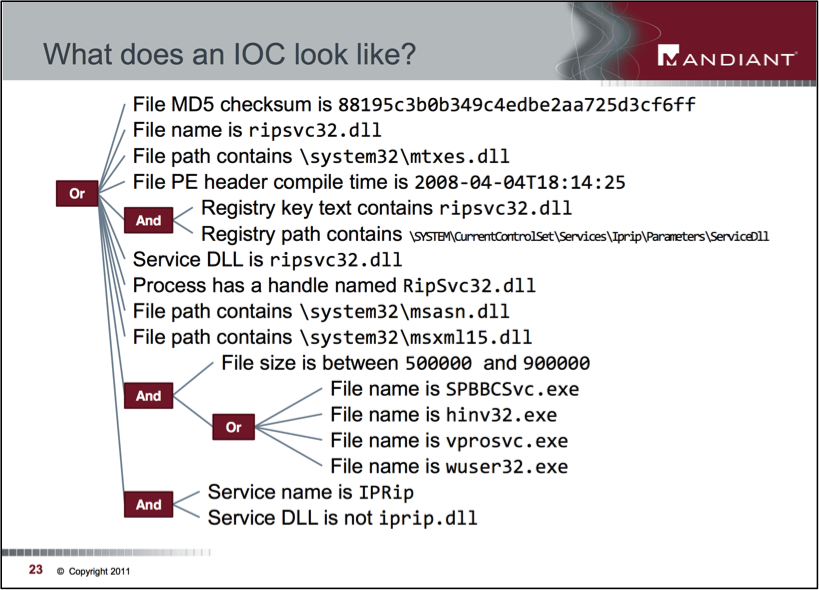

Let’s look at another example from CrowdStrike’s Jessica Decianno: “An IOC is often described in the forensics world as evidence on a computer that indicates that the security of a network has been breached.” There is no citation here, and it is not clear whether this definition from the “forensics world” has any bearing on the topic. Will Gragido also uses the term “forensic artifact,” but quickly explains that IOCs are much more than that. CrowdStrike continues: “In the cyber world, an IOC is an MD5 hash, a C2 domain or hardcoded IP address, a registry key, filename, etc. These IOCs are constantly changing making a proactive approach to securing the enterprise impossible.” Again, by MITRE’s 2012 definition, these are clearly Observables, not IOCs. Ross and Breem even show a working example of an IOC (see below) that illustrates this linkage and how an IOC handles values that are “constantly changing.”

CrowdStrike’s Decianno colors IOCs with the terms “legacy” and “reactive,” associating the word “proactive” with a new idea called an “Indicator of Attack” (IOA) that she claims is better than IOCs. The definition of “IOA” is muddy, but it includes “represent[ing] a series of actions that an adversary must conduct to succeed” and is followed by analogies to successful steps in committing crimes.

“In the cyber realm, showing you how an adversary slipped into your environment, accessed files, dumped passwords, moved laterally and eventually exfiltrated your data is the power of an IOA,” she wrote.

By MITRE’s definition, these are clearly IOCs, combined with linked Incidents, TTPs, Campaigns, and Threat Actors. Collecting and processing them in real time doesn’t make them different from IOCs. It just underscores the need to collect Observables, to combine and enrich them with other information at hand to improve their utility, and to support “ongoing efforts to create, evolve, and refine the community-based development of sharing and structuring threat information,” as MITRE put it. Why invent a new term for an existing concept while deriding a commonly accepted term meant to improve overall cyber defense?

If the objective is truly to improve the national defense against a threat from “bad” actors, both foreign and domestic, by improving the effectiveness of protection, detection, and response to a long-running pattern of compromise activity, it is incumbent on all of us in this industry to speak clearly, consistently, and for the common good. To do otherwise raises questions about intention and integrity, and may actually hinder a common defense.

Dave Dittrich is a Computer Security Researcher in the Center for Data Science at the University of Washington Tacoma. He has been involved in investigating and countering computer crimes since the late 1990s, writing extensively on host and network forensics, bots and botnets, DDoS, computer research ethics, and the “Active Response Continuum.”

Katherine Carpenter (JD, MA) is a consultant researching ways to promote privacy and data security and improve the ethics behind computer security research. She previously worked in bioethics and health.