SAN FRANCISCO—Google may have sent the tired castle analogy of network security’s soft center protected by a tough exterior out to pasture for good.

On Tuesday at RSA Conference, Google shared the seven-year journey of its internal BeyondCorp rollout, in which it establishes trust based on what it knows about the users and devices connecting to its networks. And it does so without leaning on firewalls and traditional network security gear for protection.

Director of security Heather Adkins said the company’s security engineers had their Eureka moment seven years ago, envisioning a world without walls and daring to challenge the assumption that existing walls were working as advertised.

“We acknowledged that we had to identify [users] because of their device, and had to move all authentication to the device,” Adkins said.

Google, probably quicker than most enterprises, understood how mobility was going to change productivity and employee satisfaction. It also knew that connecting through a VPN to corporate resources living behind the firewall wasn’t a long-term solution, especially for those connecting on low-speed mobile networks where reliability quickly became an issue.

The solution was to flip the problem on its head: treat every network as untrusted, and grant access to services based on what was known about users and their devices. All access to services, Adkins said, must then be authenticated, authorized and carried over encrypted connections.

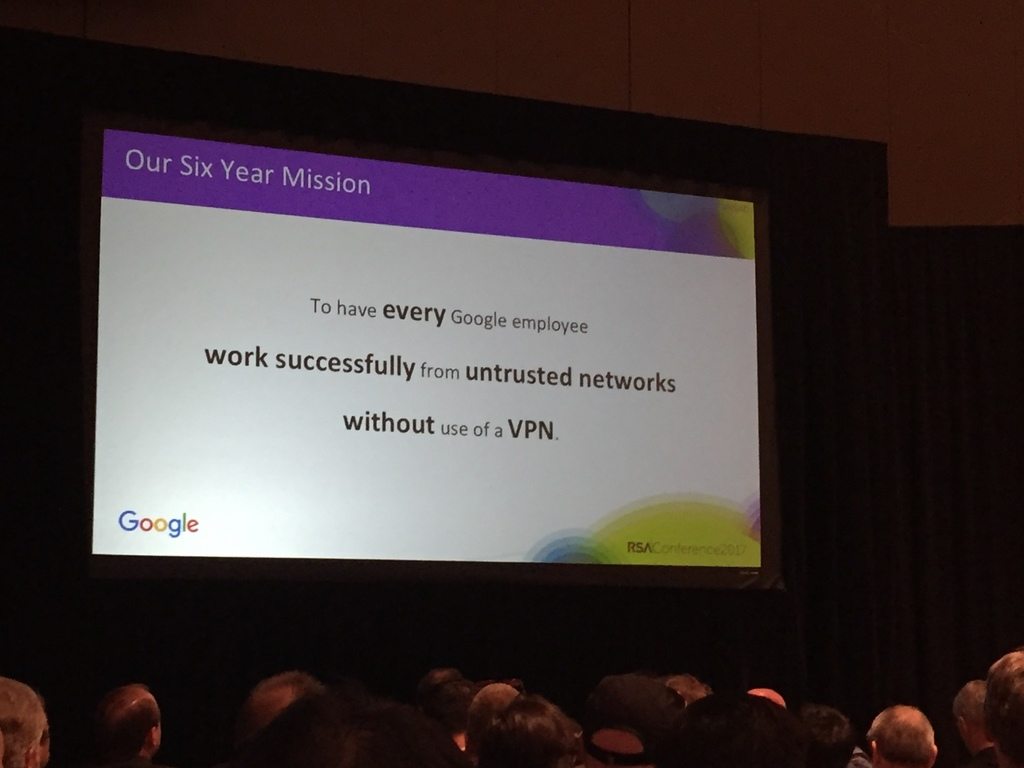

“This was the mission six years ago, to work successfully from untrusted networks without the use of a VPN,” Adkins said.

Implementing BeyondCorp required a new architecture, said Rory Ward, a site reliability engineering manager at Google, with a sharp focus on collecting quality data for analysis.

The first step was to inventory users and track their roles as their careers at Google progress, essentially reinventing job hierarchies, and to assess how and why they need to access internal services. The same intimacy was needed with respect to device information, requiring construction of a similar inventory system that tracks every device connecting to services throughout its lifecycle. For the time being, Ward said, this applies to managed devices only, though in the future he hopes to extend this capability to user-owned private devices.

With that in place, Ward said, Google engineers went to work building a dynamic trust repository that ingested data from more than two dozen sources, each feeding it information about what devices were doing on the network. Policy files described how to define trust for a device, and that trust was computed dynamically rather than assigned once.

“The trust definition of a device can go up or down dynamically depending on what was done and what the policy says,” Ward said. “We have complete knowledge of users, devices and an indication of trust of every device accessing Google systems.”
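As a rough illustration of trust that moves up or down with observed behavior, here is a minimal Python sketch. It is not Google's implementation: the signal names, the weights in `POLICY`, and the `DeviceRecord` and `trust_score` names are all hypothetical, chosen only to show a policy-driven score being recomputed as new data arrives.

```python
from dataclasses import dataclass, field

# Hypothetical policy: each observed signal raises or lowers trust.
# Neither the signal names nor the weights come from the talk.
POLICY = {
    "os_patched": 2,
    "disk_encrypted": 1,
    "missed_checkin": -1,
    "malware_detected": -5,
}


@dataclass
class DeviceRecord:
    """Hypothetical inventory entry holding a device's current signals."""
    device_id: str
    signals: set = field(default_factory=set)

    def observe(self, signal: str) -> None:
        """Record a signal reported by one of the data sources."""
        self.signals.add(signal)

    def clear(self, signal: str) -> None:
        """Drop a signal that no longer applies to the device."""
        self.signals.discard(signal)


def trust_score(device: DeviceRecord, policy: dict = POLICY) -> int:
    """Recompute trust from current signals; unknown signals count as 0."""
    return sum(policy.get(signal, 0) for signal in device.signals)


laptop = DeviceRecord("laptop-1")
laptop.observe("os_patched")
laptop.observe("disk_encrypted")
healthy = trust_score(laptop)       # trust goes up with good signals

laptop.observe("malware_detected")
compromised = trust_score(laptop)   # trust drops once a bad signal arrives
```

Because the score is derived from the current signals each time it is requested, a change in either the device's state or the policy takes effect on the next evaluation.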

Next, an access control engine was developed to enforce policy. It ingests service requests along with user and device information, then applies the policy rules that govern access to resources.

For example, Ward said, to access source code systems, one would have to be a full-time Google employee in engineering and be using a fully trusted desktop.
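The source code rule Ward describes can be written as a small policy check. The Python sketch below is illustrative only: `TrustTier`, `AccessRequest`, `can_access_source_code`, and the field names are hypothetical and do not reflect how Google's access control engine is actually built.

```python
from dataclasses import dataclass
from enum import IntEnum


class TrustTier(IntEnum):
    """Hypothetical device trust tiers; higher means more trusted."""
    UNTRUSTED = 0
    BASIC = 1
    FULLY_TRUSTED = 2


@dataclass(frozen=True)
class AccessRequest:
    """Hypothetical bundle of what the engine knows about one request."""
    user_is_full_time: bool
    user_department: str
    device_type: str
    device_trust: TrustTier


def can_access_source_code(req: AccessRequest) -> bool:
    """Allow access only if every condition in Ward's example holds."""
    return (
        req.user_is_full_time
        and req.user_department == "engineering"
        and req.device_type == "desktop"
        and req.device_trust == TrustTier.FULLY_TRUSTED
    )


engineer_at_desk = AccessRequest(True, "engineering", "desktop",
                                 TrustTier.FULLY_TRUSTED)
engineer_on_basic_laptop = AccessRequest(True, "engineering", "laptop",
                                         TrustTier.BASIC)

allowed = can_access_source_code(engineer_at_desk)
denied = can_access_source_code(engineer_on_basic_laptop)
```

Note that the decision depends only on attributes of the user and the device. The network the request arrives from plays no part, which is the point of the model.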

This part of the rollout, Ward said, took two to three years to implement and brought Google closer to its goal of enabling access from anywhere.

The final part of the rollout, Adkins and Ward said, was the implementation phase. While the project had executive support, there was a caveat: Don’t break anything or anybody. This was a tall order given Google’s tens of thousands of internal users and devices and 15 years of assertions about a privileged network.

Ward said the expensive first step was to deploy an unprivileged and untrusted network in every one of Google’s approximately 200 buildings. Engineers grabbed samples of traffic from the trusted network and replayed them on the new untrusted network to analyze how workloads would behave. An agent was installed on every device in the inventory, and every packet from those devices was also replayed on the new network to see which traffic would fail there. This was a two-year process as well, and the project ultimately proceeded to full implementation.

“We managed to move the vast majority of devices, tens of thousands of devices and users, onto the new network and did not manage to break anybody,” Ward said.

Adkins said that earning executive support required making a convincing case that the initiative would make IT simpler, less expensive and more secure, and would make employees happier and more productive.

“Clear business objectives are compelling to executives,” Adkins said. “We went from location-based authentication and knowledge-based authentication that relies on quality data. Accurate data was the key to be able to make this thing work.”