I recently read a blog post by CloudFlare and Shawn Graham that asked a fantastic (and timely) question: “Do Hackers Take The Holidays Off?” CloudFlare sees traffic for hundreds of thousands of websites and was able to answer the question. They looked at the average percentage of requests that constitute threats, graphed the deviation, and then overlaid any events happening on those days. Their conclusion: it depends on the holiday.

This got me thinking. “Wouldn’t it be interesting to see if holidays or the time of year affect code security quality?” At what time of year is the most insecure software written? During the summer? Over the holiday period? During March Madness? This summer I saw a few people at the beach with their laptops, and I wonder whether any of them were coding.

What I Looked At

My company, Veracode, sees the security quality of the thousands of applications we test, and I wanted to see if there is any seasonality in it. I decided to focus my analysis on applications in the early stages of the development life cycle: those that were actively in development or undergoing alpha and beta testing. Early in the development process, I reasoned, there would be a relatively quick turnaround from when the code was written to when it was scanned. In other words, I’m assuming that during the early stages of a development project, code is written and scanned within the same calendar month. For each application, I looked at its size and a roll-up of its total quantity of flaws.

What’s Normal?

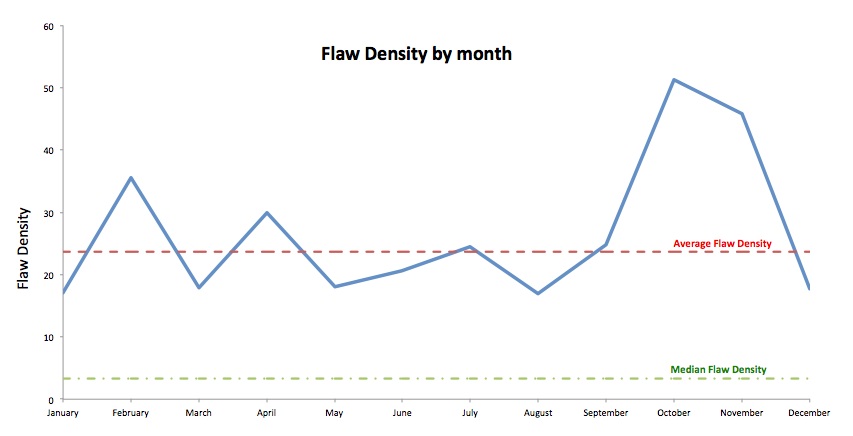

To make a comparison, you first need to know what normal looks like. So I looked at the thousands of alpha- and beta-stage applications Veracode scanned over the past couple of years. I saw an average flaw density of 24 flaws per megabyte of executable code and a median flaw density of 3 flaws per megabyte of executable code. If you are interested, we talk more about flaw density observations in State of Software Security Version 3.
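For readers who want to see how an average and a median can sit so far apart, here is a minimal sketch of the flaw density calculation. Everything in it is an assumption for illustration: the `flaw_density` helper, the choice of 1024 × 1024 bytes per megabyte, and the sample numbers, which are made up to reproduce the shape of the figures above and are not Veracode data.

```python
from statistics import mean, median

# Assumption: one megabyte is 1024 * 1024 bytes.
BYTES_PER_MB = 1024 * 1024


def flaw_density(flaw_count: int, size_bytes: int) -> float:
    """Return flaws per megabyte of executable code (hypothetical helper)."""
    if size_bytes <= 0:
        raise ValueError("size_bytes must be positive")
    return flaw_count / (size_bytes / BYTES_PER_MB)


# Made-up (flaw_count, size_bytes) pairs, one per application.
sample_apps = [
    (2, 2 * BYTES_PER_MB),    # 1 flaw per MB
    (6, 3 * BYTES_PER_MB),    # 2 flaws per MB
    (3, 1 * BYTES_PER_MB),    # 3 flaws per MB
    (8, 2 * BYTES_PER_MB),    # 4 flaws per MB
    (110, 1 * BYTES_PER_MB),  # 110 flaws per MB, a single outlier
]

densities = [flaw_density(flaws, size) for flaws, size in sample_apps]
average_density = mean(densities)
median_density = median(densities)

print(f"average: {average_density:.1f} flaws/MB")
print(f"median:  {median_density:.1f} flaws/MB")
```

In this toy data set, one very flaw-dense application is enough to pull the average far above the median, which is one way a gap like 24 versus 3 can arise.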

The Results

For the time period I looked at (the last 24 months), January through September is relatively flat and in line with the average flaw density. Then there is a big bump in flaw density in October and November, and things begin to settle down once we go into December.

The jump in application flaws is easy enough to spot. But what could cause it? Some of it could be seasonal. Maybe the buildup to Thanksgiving has developers distracted? Are developers adjusting after the summer break, when “the living is easy” and the roads are quiet? Fall brings the extra pressure of dropping kids at school and rushing in the evenings to pick them up after sports. There is also the added pressure to produce a high volume of code to meet end-of-year deadlines and releases. Scientific literature is full of studies on the effects of stress on higher cognitive activities, and it’s reasonable to assume that application developers, like most of us, may respond to added pressure by making more mistakes in the code they write.
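If you want to try a month-by-month comparison like this on your own scan data, a rough sketch might look like the following. The function names, the 1.5× threshold for calling a month a "bump", and the sample scans are all hypothetical; the post does not say how the monthly figures were aggregated, so averaging per month is an assumption here.

```python
from collections import defaultdict
from statistics import mean


def monthly_mean_density(scans):
    """Group (month, flaws_per_mb) pairs and return {month: mean density}."""
    by_month = defaultdict(list)
    for month, density in scans:
        by_month[month].append(density)
    return {month: mean(values) for month, values in sorted(by_month.items())}


def months_above_baseline(monthly, baseline, ratio=1.5):
    """Return the months whose mean density exceeds baseline * ratio.

    The ratio is an arbitrary illustrative cutoff, not a Veracode rule.
    """
    return [month for month, density in monthly.items() if density > baseline * ratio]


# Made-up scans as (calendar month, flaws per MB); not real results.
sample_scans = [
    (1, 20.0), (1, 28.0),
    (6, 22.0), (6, 26.0),
    (10, 40.0), (10, 60.0),
    (11, 45.0), (11, 55.0),
    (12, 25.0), (12, 31.0),
]

monthly = monthly_mean_density(sample_scans)
flagged = months_above_baseline(monthly, baseline=24.0)

for month, density in monthly.items():
    marker = "  <-- above baseline" if month in flagged else ""
    print(f"month {month:2d}: {density:5.1f} flaws/MB{marker}")
```

Because an average is sensitive to a handful of very flaw-dense applications, it may be worth running the same comparison with the median before reading too much into any one month.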

What do you think? Is the quality of code development seasonal?

For more security intelligence, read the results of our latest State of Software Security report. (You can download the report here.) The report provides security intelligence derived from multiple testing methodologies (static, dynamic, and manual) on the full spectrum of application types (components, shared libraries, web, and non-web applications) and programming languages (including Java, C/C++, and .NET) from every part of the software supply chain on which organizations depend.