NEW ORLEANS – Researchers have observed the blossoming of a new type of social media nuisance they are calling Trolling-as-a-Service. They say these rabble-rousing efforts have emerged as a clever new way for hackers to launch coordinated and dangerous attacks via Facebook and Twitter.

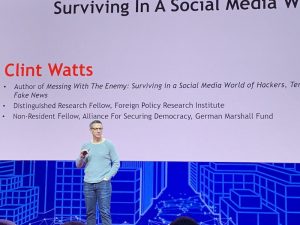

Speaking at CPX 360 on Tuesday, Clint Watts, a research fellow at the Foreign Policy Research Institute and former FBI agent, said that 10 years ago advanced persistent threat (APT) actors posed the biggest cyber threat, but today’s gravest concerns come from sophisticated online “hecklers,” which he dubbed “advanced persistent manipulators” (APMs).

“In 20 years we’ve gone from no information to wondering if too much information is worse,” said Watts, whose session was titled “Messing With the Enemy: Surviving in a Social Media World.”

“In 2016 we saw a new era which I refer to as the rise of the trolls. These troll armies have become way more sophisticated, with mass organized hecklers working day and night,” he said.

APMs have emerged as a threat thanks to two converging forces on platforms such as Facebook, Twitter and Reddit: individual social media users, and the engagement algorithms the platforms use to promote content.

Social media algorithms can be used to magnify a troll’s message and promote the re-sharing of a message that appeals to a niche corner of a platform. With effort, that message can gain acceptance among a wider audience. Watts said social media users are sometimes unwitting pawns in this game: by engaging with APM-seeded content toward which they have an implicit bias, they allow engagement algorithms to highlight and promote it.

Watts said targeted users are often primed with content intended to incite fear, mobilize users or draw on political narratives. Those hot-button topics are designed to attract massive amounts of attention and interaction, which in turn increases the visibility of the message on platforms like Twitter and Facebook.

In doing so, APMs have historically been able to launch an array of malicious attacks, said Watts. These range from “hecklers” using social media to discredit facts in order to advance their own narratives to, in some cases, sophisticated hackers leveraging their influence in and access to those online communities as a launching pad for compromising, discrediting or defacing other accounts.

Over the past year, the social media threat landscape has continued to evolve as hackers invest more in social platforms as an attack surface. Trolling-as-a-Service, which is essentially disinformation for hire, has emerged as a way for attackers to launch more sophisticated campaigns. For instance, one trolls-for-hire service will create websites and social media accounts for fake people, or even fake universities, said Watts. The service’s clients can boost their sock puppets’ personas and credibility by linking their profiles to the fake institutions.

APMs are also paying “social media influencers” to tease a political message repeatedly in their social feeds.

Researchers say these efforts have been quite successful in the past. In June 2019, an influence operation that re-posted old news about terror incidents and passed it off as new was able to incite fear within vulnerable online communities. Misinformation spread via social media has also raised concerns about election influence campaigns. A more serious example came in 2013, when the Associated Press’ Twitter account was hacked and the threat actors tweeted “Breaking: Two Explosions in the White House and Barack Obama is Injured,” causing the stock market to briefly plunge.

Beyond Trolling-as-a-Service, the social media space also faces future threats aimed at stirring up misinformation. These include deepfakes, videos fabricated or manipulated with artificial intelligence to appear real, which can be used to call reality itself into question.

There are several steps that can be taken to fight back against APMs, and governments and social media platforms need to get involved, Watts said. For instance, it’s necessary to restore trust and confidence in institutions, reinforce data and science, and refute falsehoods levied against the government.

“We also need to partner with social media companies to stop false information impacting public safety, and create information rating systems,” he said.