Months after researchers disclosed a new way to exploit Alexa and Google Home smart speakers to spy on users, those same researchers now warn that Amazon and Google have yet to create effective ways to prevent the eavesdropping hack.

The researchers who in October disclosed the “Smart Spies” hack, which enables eavesdropping, voice phishing, or using people’s voice cues to determine passwords, told Threatpost this week that little has been done to prevent the attacks.

“Nothing has changed,” Karsten Nohl, managing director at Security Research Labs (SRLabs), told Threatpost. “And that in itself is shocking, given how many users are exposed to possible threats right now. It’s been more than five months since our disclosure and the issues persist.”

The Hack

The vulnerability, first disclosed months ago, lies in the small third-party apps that developers create to extend the devices’ capabilities: Skills on Alexa and Actions on Google Home, according to a report by SRLabs. These apps “can be abused to listen in on users or vish (voice phish) their passwords,” researchers said.

The hack leverages the “fallback intent,” which a voice app triggers when it cannot match the user’s most recent spoken command to any other intent; normally the app responds by offering help. For Alexa users, the researchers also leveraged the built-in stop intent, which reacts to the user saying “stop.”
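
To make the intent terminology concrete, here is a toy keyword router in Python. It is a hypothetical sketch, not the Alexa Skills Kit or Actions on Google API: the intent names and the keyword matching are assumptions made for illustration. Any utterance that matches no declared intent falls through to the fallback intent, the hook the hack relies on.

```python
from typing import Dict, List

# Hypothetical intent table: intent name -> trigger keywords.
# Real voice platforms use trained language models, not keyword lists.
INTENTS: Dict[str, List[str]] = {
    "HoroscopeIntent": ["horoscope", "zodiac"],
    "StopIntent": ["stop", "cancel"],
}

FALLBACK = "FallbackIntent"


def route(utterance: str, intents: Dict[str, List[str]] = INTENTS) -> str:
    """Return the first intent whose keywords appear in the utterance,
    or the fallback intent if nothing matches."""
    words = utterance.lower().split()
    for name, keywords in intents.items():
        if any(k in words for k in keywords):
            return name
    # Unmatched speech goes to the fallback intent; a legitimate app
    # answers it with help text, but the app decides what to say.
    return FALLBACK


print(route("read my horoscope"))      # HoroscopeIntent
print(route("stop"))                   # StopIntent
print(route("what is the weather"))    # FallbackIntent
```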

Researchers first built a seemingly innocuous app, submitted it to Amazon’s and Google’s review processes, and had it published. After the review, they were able to change the app’s functionality. They then replaced the app’s welcome message with a fake error message and made the voice app “say” the character sequence “U+D801, dot, space” (an unpaired UTF-16 surrogate followed by a period and a space).

“Since this sequence is unpronounceable the speaker remains silent while active. Making the app ‘say’ the characters multiple times increases the length of this silence,” researchers said.
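
The padding the researchers describe amounts to simple string repetition. The Python sketch below only builds the string and checks that it contains nothing speakable; how a particular speech engine renders it, and how long each repetition stays silent, is not modeled. The helper name `silence_padding` is hypothetical.

```python
import unicodedata

# The sequence as reported by SRLabs: U+D801, a period, and a space.
# U+D801 is an unpaired UTF-16 high surrogate with no pronunciation.
SILENT_UNIT = "\ud801. "


def silence_padding(repeats: int) -> str:
    """Repeat the unpronounceable unit; more repeats, longer output."""
    return SILENT_UNIT * repeats


padding = silence_padding(4)
print(len(padding))                          # 12 code points
print(unicodedata.category("\ud801"))        # Cs (surrogate)
print(any(ch.isalnum() for ch in padding))   # False: no letters or digits
```

The padding is deliberately not printed: a lone surrogate cannot be encoded as UTF-8, so writing it to a UTF-8 terminal would raise an error.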

Using this trick, researchers were able to launch various attacks, including requesting the user’s password by playing a phishing message after the silence, or eavesdropping on users by faking the stop intent.

Vendor Response

As of Tuesday, researchers said, the hack still works for them.

Amazon and Google both appear to have introduced manual spot checks for phishing skills; however, this does little to prevent malicious apps from being uploaded in the first place, Nohl said.

The phishing Skill and Action that researchers put live were taken offline after several days, he said. But researchers were then able to simply resubmit the apps without changes, and they were approved again.

“Some things have been done, but [they’re] somewhat ineffective,” Nohl told Threatpost. “If you can upload the exact same app again, after it’s been kicked out, and it gets approved again, then that doesn’t seem to be a self-learning feedback loop that is the least that we would expect so that we could hope for more accuracy and speed in the future. It also shows there’s a massive window of opportunity [for bad actors].”
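
The feedback loop Nohl asks for could start with something as basic as remembering what was taken down. The Python sketch below fingerprints a submission by hashing a canonical JSON form, so an unchanged resubmission is caught even if its fields arrive in a different order. The submission fields and the in-memory store are hypothetical; neither vendor has described its review pipeline in these terms.

```python
import hashlib
import json
from typing import Any, Dict, Set

# Hypothetical store of fingerprints for apps already taken down.
takedown_fingerprints: Set[str] = set()


def fingerprint(submission: Dict[str, Any]) -> str:
    """Hash a canonical JSON form so key order does not change the result."""
    canonical = json.dumps(submission, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def record_takedown(submission: Dict[str, Any]) -> None:
    takedown_fingerprints.add(fingerprint(submission))


def is_known_bad(submission: Dict[str, Any]) -> bool:
    return fingerprint(submission) in takedown_fingerprints


# Hypothetical submission fields, for illustration only.
removed = {"name": "Daily Horoscope", "welcome": "This skill is not available."}
record_takedown(removed)

resubmitted = {"welcome": "This skill is not available.", "name": "Daily Horoscope"}
print(is_known_bad(resubmitted))   # True: same content, reordered keys
```

An exact hash only catches unchanged resubmissions like the ones the researchers made; a lightly edited copy would need fuzzier similarity matching.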

Amazon, for its part, did make a few modifications to make the hacks more difficult; however, Nohl said the mitigations are “comically ineffective.”

For instance, Amazon took steps to prevent hackers from overwriting the “stop” intent, but Nohl said that a glitch now appears to allow a hacker-supplied stop intent to exist in parallel to the original one. That means the stop intent can be overwritten by hackers about 50 percent of the time, he said.
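
Nohl’s description suggests two stop handlers end up coexisting, with either one answering. One way to rule that out, sketched here as a hypothetical Python registry rather than Amazon’s actual implementation, is to give each reserved intent exactly one owner and reject a second registration outright instead of adding it alongside the original. The `RESERVED` set and the class name are assumptions.

```python
from typing import Callable, Dict

Handler = Callable[[], str]

# Hypothetical: intents the platform reserves for itself.
RESERVED = {"StopIntent"}


class IntentRegistry:
    """Maps each intent to exactly one handler."""

    def __init__(self) -> None:
        self._handlers: Dict[str, Handler] = {}

    def register(self, intent: str, handler: Handler, builtin: bool = False) -> None:
        if intent in RESERVED and not builtin:
            raise ValueError(f"{intent} is reserved for the platform")
        if intent in self._handlers:
            # Refuse a second handler instead of letting two coexist.
            raise ValueError(f"{intent} already has a handler")
        self._handlers[intent] = handler

    def dispatch(self, intent: str) -> str:
        return self._handlers[intent]()


registry = IntentRegistry()
registry.register("StopIntent", lambda: "session closed", builtin=True)

try:
    registry.register("StopIntent", lambda: "Goodbye!")  # app-supplied override
except ValueError as err:
    print(err)  # StopIntent is reserved for the platform

print(registry.dispatch("StopIntent"))  # session closed
```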

Amazon also took steps to block the character sequence (U+D801) that causes Alexa devices to fall silent as part of the hack, but dozens of other unpronounceable characters remain that could be used for the same trick, so blocking just one of them has little effect, Nohl said.
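
The gap Nohl points to is the difference between blocking one code point and blocking a class of characters. The Python sketch below compares a single-entry blocklist with a filter keyed on Unicode general categories. Which categories count as unpronounceable is an assumption here (surrogates, private-use, and unassigned code points); format characters (Cf) such as the zero-width space might belong on the list too. This is not how Amazon’s filter is known to work.

```python
import unicodedata

# The single-character fix: block exactly one code point.
BLOCKLIST = {"\ud801"}

# Assumption: treat these Unicode general categories as unpronounceable.
# Cs = surrogate, Co = private use, Cn = unassigned.
UNSPEAKABLE_CATEGORIES = {"Cs", "Co", "Cn"}


def blocked_by_list(text: str) -> bool:
    return any(ch in BLOCKLIST for ch in text)


def blocked_by_category(text: str) -> bool:
    return any(unicodedata.category(ch) in UNSPEAKABLE_CATEGORIES for ch in text)


for sample in ["\ud801. ", "\ud802. ", "\ue000. ", "Hello. "]:
    print(hex(ord(sample[0])), blocked_by_list(sample), blocked_by_category(sample))
# 0xd801 True True
# 0xd802 False True
# 0xe000 False True
# 0x48 False False
```

The neighboring surrogate U+D802 and the private-use character U+E000 slip past the blocklist but are caught by the category check.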

Security Research Labs said it is continuing the responsible disclosure process with Google and Amazon.

“Customer trust is important to us,” an Amazon spokesperson told Threatpost. “We have mitigations in place to detect this type of skill behavior and reject or take them down when identified. SR Labs contacted us late last week with new research and skills they developed, which we identified and blocked. We are currently reviewing the research, but can confirm we identified and took down all the new phishing skills before they were reported to us using our existing mitigations and monitoring tools. We will develop any necessary additional mitigations following our review.”

Google did not respond to a request for comment from Threatpost.

Ultimately, Amazon and Google need to focus on weeding out malicious skills from the get-go, rather than after they are already live, Nohl stressed.

“It seems that the apps aren’t filtered by potential malicious behavior; they’re filtered by actual abusive behavior, but days later, and you can easily re-upload the app… that’s just not good enough,” said Nohl.

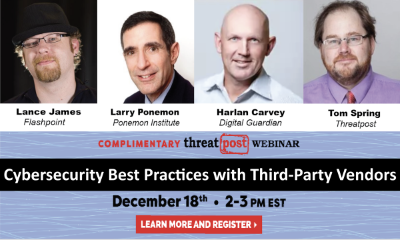

Free Threatpost Webinar: Risk around third-party vendors is real and can lead to data disasters. We rely on third-party vendors, but that doesn’t mean forfeiting security. Join us on Dec. 18th at 2 pm EST as Threatpost looks at managing third-party relationship risks with industry experts Dr. Larry Ponemon, of Ponemon Institute; Harlan Carvey, with Digital Guardian and Flashpoint’s Lance James. Click here to register.