In the first known case of successful financial scamming via audio deep fakes, cybercrooks were able to create a near-perfect impersonation of a chief executive’s voice – and then used the audio to fool his company into transferring $243,000 to their bank account.

A deep fake is a plausible video or audio impersonation of someone, powered by artificial intelligence (AI). Security experts say that the incident, first reported by the Wall Street Journal, sets a dangerous precedent.

“In the identity-verification industry, we’re seeing more and more artificial intelligence-based identity fraud than ever before,” David Thomas, CEO of identity verification company Evident, told Threatpost. “As a business, it’s no longer enough to just trust that someone is who they say they are. Individuals and businesses are just now beginning to understand how important identity verification is. Especially in the new era of deep fakes, it’s no longer just enough to trust a phone call or a video file.”

The WSJ’s report, published over the weekend, cited the victim company’s insurer, Euler Hermes Group SA, which declined to name the affected company but described the incident in detail.

The incident began in March, when the CEO of an energy company thought he was speaking by phone with his boss, the chief executive of the firm’s German parent company. The caller urgently asked the CEO to send €220,000 (about $243,000) to a Hungarian supplier, promising that the money would be reimbursed.

The victim, deceived into thinking that the voice was that of his boss – particularly because it had a similar slight German accent and voice pattern – made the transfer. However, once the transaction went through, the fraudsters called back, asking for another urgent money transfer. At that point, the CEO became suspicious and refused to make the payment.

The funds reportedly went from Hungary to Mexico before being transferred to other locations. Euler Hermes Group SA was able to reimburse the affected company, according to the report.

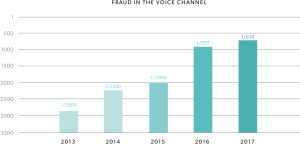

The incident points to how voice fraud, powered by artificial intelligence, is a burgeoning cybersecurity threat to enterprises and consumers alike. A 2018 report by Pindrop found that voice fraud had jumped 350 percent from 2013 to 2017 – with one in 638 calls synthetically created.

While the cybersecurity industry has touted AI as a way for developers to automate functions and for enterprises to sniff out anomalies, this incident also shows how easily the technology can be used maliciously.

In fact, after creating a replica of popular podcaster Joe Rogan’s voice (generating life-like speech from text input alone), Dessa, a company that offers enterprise-grade tools for machine-learning engineering, warned that anyone could use AI to impersonate people. Spam callers could impersonate victims’ family members to obtain personal information; criminals could gain access to high-security areas by impersonating a government official; and deep fakes of politicians could be used to manipulate election results, Dessa said in a May post.

“Right now, technical expertise, ingenuity, computing power and data are required to make models like [these] perform well,” said Dessa. “So not just anyone can go out and do it. But in the next few years (or even sooner), we’ll see the technology advance to the point where only a few seconds of audio are needed to create a life-like replica of anyone’s voice on the planet. It’s pretty… scary.”

Security experts have warned that AI is also a boon for cybercriminals, who may use it to automate phishing, to abuse AI voice-authentication applications in spoofing attacks, or to scale up packet sniffing. Fortunately, verification techniques exist that can help flag such fraud attempts, said Evident’s Thomas.

“Businesses must be vigilant and employ proper verification techniques – like multi-factor identification, facial recognition and comprehensive identity proofing – to thwart today’s AI threats,” Thomas told Threatpost.