It turns out the best way to get people to pay attention to those malware warnings that pop up in browsers may be to stop tweaking them, scrap them entirely and rebuild from scratch. According to a study on the subject published last week, effective malware warnings shouldn’t scare users away; they should give a clear and concise idea of what is happening and how much risk users are exposing themselves to.

It’s already well documented that the average computer user largely ignores the warnings, but new research is trying to determine just how browser architects and information technology specialists can create more effective warnings going forward.

Ross Anderson, the Head of Cryptography at Cambridge University, and David Modic, a research associate at the school’s Computer Laboratory, used psychology last year to find their answer. The duo’s research, a 31-page document titled “Reading This May Harm Your Computer: The Psychology of Malware Warnings,” was released Friday.

“We’re constantly bombarded with warnings designed to cover someone else’s back, but what sort of text should we put in a warning if we actually want the user to pay attention to it?” Anderson asked in a post on his blog Light Blue Touchpaper last week accompanying the study.

The biggest problem the researchers found regarding malware warnings is that everyday users would ignore them if they could. The two cite a handful of previous studies, including ones that look at the length, frequency, and technicality of warnings, but point out that “daily exposure to an overwhelming amount of warnings” remains an issue.

People continue to have a hard time separating real threats from inconvenient online warnings.

Anderson and Modic argue that one way to fix the warnings is to change the narrative.

“There is a need for fewer but more effective malware warnings… particularly in browsers,” the paper reads, reasoning that the way certain warnings are worded is key to getting users to pay attention to them.

To address this, Anderson and Modic took some of the same social psychological factors that scammers have used on victims over the years and tried to apply them to browser warnings.

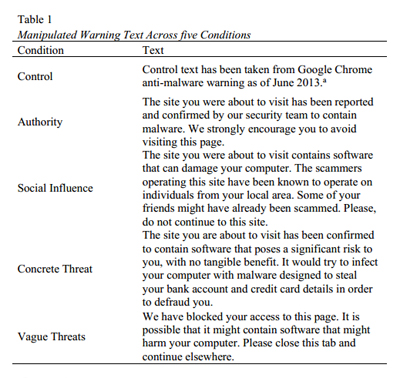

As part of their experiment, the researchers presented more than 500 men and women with variations of a Google Chrome warning (see table, right). Each one incorporated one of the following angles:

- Influence of authority

- Social influence

- Concrete threats

- Vague threats

Anderson described in his blog which condition gave the best results:

“What works best is to make the warning concrete; people ignore general warnings such as that a web page ‘might harm your computer,’ but do pay attention to a specific one such as that the page would ‘try to infect your computer with malware designed to steal your bank account and credit card details in order to defraud you.’”

On the whole, respondents heeded malware warnings regardless of what they said, but as Anderson and Modic expected, they heeded them more often when the warnings invoked authority or described concrete threats.

“Warning text should include a clear and non-technical description of potential negative outcome or an informed direct warning given from a position of authority,” the researchers ultimately deduced.

Concrete threats – when individuals have a clear idea of what is happening and how much risk they are exposing themselves to – wound up being the No. 1 predictor of click-through resistance.

The experiment found that authority – when the warnings come from trusted sources – was the No. 2 predictor. Trusted figures “elicit compliance” and, in the study, even extended to include Facebook friends.

“Respondents also indicated that they were more likely to click through [warnings] if their friends or Facebook friends told them it was safe to do. Facebook friends thus appear to have more sway on the decision to click through,” they said.

In some cases, these findings could be cause for concern, especially given the number of viral phishing campaigns that have leveraged Facebook over the past several years. Still, Modic and Anderson give credence to social media, reasoning that friends on Facebook may “carry more informative power than regular ones.”

Modic and Anderson made a handful of other observations from their experiment that tie into the idea of overhauling malware warnings.

Nine out of every 10 respondents kept their warnings turned on, and only one out of every 10 claimed they wanted to turn theirs off but were unsure of how to do it. While none of this is exactly concerning, it does speak to a tiresome status quo. Users could be getting used to seeing the same static malware warning.

As is to be expected, those more familiar with computers kept their warnings on, while those who did turn theirs off did so because they tend to ignore malware warnings and other requests from their computers.

“The inability to understand the warnings was another significant predictor of turning the malware warnings off. We might infer that the language in existing warnings is not as clear as it could be,” the study asserts.

The research calls back to a few similar studies of late, including one released last summer by Google’s Adrienne Porter Felt and UC Berkeley’s Devdatta Akhawe.

In that study, users mostly paid attention to the warnings they saw, clicking through malware and phishing warnings only 25 percent of the time.