The proliferation of wearable devices coupled with smartphone apps that monitor heart rates and other health metrics raises an important question: How exactly should the information generated by these devices be regulated? If there's a fistfight in a bar, can the data from a person's Fitbit accelerometer be subpoenaed? How much user-generated data can companies share or integrate into advertising?

Experts discussed the future of consumer-generated health information and its privacy implications at a Federal Trade Commission-led panel Wednesday morning. The seminar, held at the FTC's office in Washington, D.C., concluded the agency's spring privacy series.

The obvious problem here, as FTC Commissioner Julie Brill pointed out during the morning's welcoming remarks, is that this data is a double-edged sword. The consumer information gathered by these devices is clearly beneficial: it encourages individuals to eat better and exercise, and it helps users monitor the health of family members. Yet the data is mostly stored outside the Health Insurance Portability and Accountability Act (HIPAA) silo and is not controlled by doctors, hospitals or insurers.

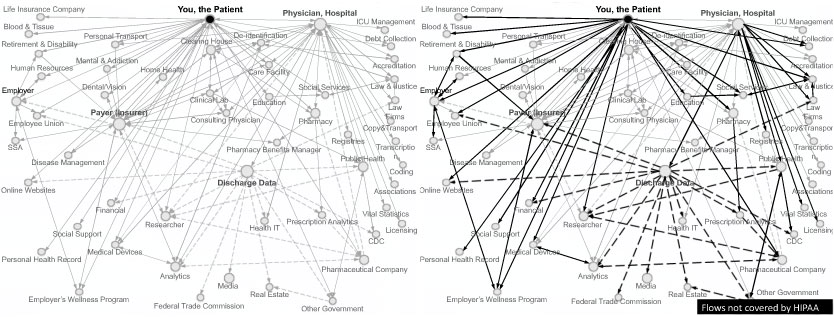

More information than users may realize falls outside HIPAA's regulatory regime. According to statistics tallied by TheDataMap.org, a Harvard-led research site, around half of the medical information that ends up being shared by patients isn't covered by the act.

“I’m not saying that individuals shouldn’t share this info,” Latanya Sweeney, the FTC’s Chief Technologist, said while describing the map on Wednesday, “but the goal should be to figure out what the risks are and jointly move forward.”

As Joy Pritts, the Chief Privacy Officer at HHS’ Office of the National Coordinator for Health Information Technology, pointed out, privacy in the healthcare realm has always been regulated sector by sector, which makes protecting it an uphill battle. Privacy was never the primary concern, since HIPAA mainly applied to health providers and healthcare clearinghouses.

“HIPAA really generated from a movement to standardize claims data. It wasn’t really about privacy at all originally,” Pritts acknowledged. “Privacy was included as a protection, but the focus was on simply the administration of health claims and how they were processed.”

While the American Medical Association and the American Medical Informatics Association both have a smattering of self-regulatory guidelines for how their members communicate electronically with patients, there are very few regulatory efforts governing consumer-generated health data and how companies can share that data for marketing purposes.

“There’s nothing that I know of,” Joseph Lorenzo Hall, the Chief Technologist for the Center for Democracy & Technology, said when asked whether there was any sort of overarching regulation. “I would love to be proven wrong. I think the time has come.”

Hall noted that the Department of Commerce has something along these lines: the National Telecommunications and Information Administration’s Mobile App Transparency Code of Conduct, which requires clear, short-form disclosure when apps collect users’ medical information. Hall added that the Digital Advertising Alliance and the Network Advertising Initiative also have guidelines requiring explicit consent before collecting user information that can be used for behavioral advertising.

While these lay some groundwork, there’s no all-encompassing mandate governing companies whose apps help users generate health data.

“Transparency establishes trust,” Sally Okun, the Vice President for Advocacy, Policy and Patient Safety for the app PatientsLikeMe, said during the panel.

PatientsLikeMe, a Cambridge, Mass.-based medical social networking app, allows users with similar ailments to find and confer with one another.

Okun pointed out that the company makes what its app does as transparent as possible in its user agreement. The app takes patient information, de-identifies it and hands it off to pharmaceutical companies and clinical researchers. At the same time, it encourages users not to use their real names and to be careful about what they share. Users don’t have to post pictures of themselves on the app; when they do, the company doesn’t promote it, but recognizes it as a choice the user has made.

“I think we need to find a way of unpacking some of the ways we can make it easier for patients to share this kind of information without necessarily compromising their privacy to the best degree possible,” Okun said.

“Recognizing that when you’re on the internet your privacy is subject to being revealed and that’s not something any of us can fully protect but when consumers are aware of that in the most explicit and transparent way, I think we actually elevate their willingness and their appreciation of why sharing health data can be actually quite beneficial.”

While the panelists argued that policymakers and clinicians need to catch up with consumer-generated data, giving it more weight without letting it overload their workflow, some also believed that app creators should share the responsibility.

“I do think we need to start holding a higher level of accountability around the use of apps and things that are sending data to places that may not be necessarily in our best interest,” Okun said. “Until we do that, I think we need to be much more aware of opting in or opting out when safety or access to our information might be at risk.”

One idea that was bandied about during the panel was to have app developers implement some sort of real-time notification when an app is about to disclose potentially sensitive health information.

“It could say: ‘This app is trying to access your medical record…’ that could be really neat,” Christopher Burrow, M.D., the EVP of Medical Affairs at the health IT firm Humetrix, said.

One of Humetrix’s apps, iBlueButton, which lets patients send health information to their doctors, does something along those lines. The app sends medical data to health providers’ iPads using a one-time cryptographic key, and it also shows patients a pop-up warning. That alert tells users their information is about to be sent and urges them to verify that it’s going to the right physician.

Having that kind of functionality on a universal level could be a big win for the industry, Hall reasoned.

“We want people to make cool stuff but we also don’t want to keep on having these common failures,” Hall said of the healthcare app world. “We want to have something that’s sort of embedded into how these tools are created.”

A study by the Pew Research Center’s Internet & American Life Project last year showed that 60 percent of adults in the U.S. look for health information online and that about half of them divulge sensitive information about themselves in the process. As technology continues to adapt to patients (apps that log your weight and daily exercise habits, or that send medication reminders, are commonplace these days), the panelists ultimately believe that privacy protection should be built into these apps and devices.